Sora-2 Video Models

The Sora-2 family from OpenAI is a next-generation video and audio generation model family. It supports both text-to-video and image-to-video generation, with synchronized dialogue, sound effects, improved physical realism, and fine-grained control.

Explore the Leading Models

Sora-2 Image-to-video-pro Developer

OpenAI Sora 2 Image-to-Video Pro creates physics-aware, realistic videos with synchronized audio and greater steerability.

Sora-2 Text-to-video-pro Developer

OpenAI Sora 2 Text-to-Video Pro creates high-fidelity videos with synchronized audio, realistic physics, and enhanced steerability.

Sora-2 Image-to-video Developer

OpenAI Sora 2 generates realistic image-to-video content with synchronized audio, improved physics, sharper realism and steerability.

Sora-2 Text-to-video Developer

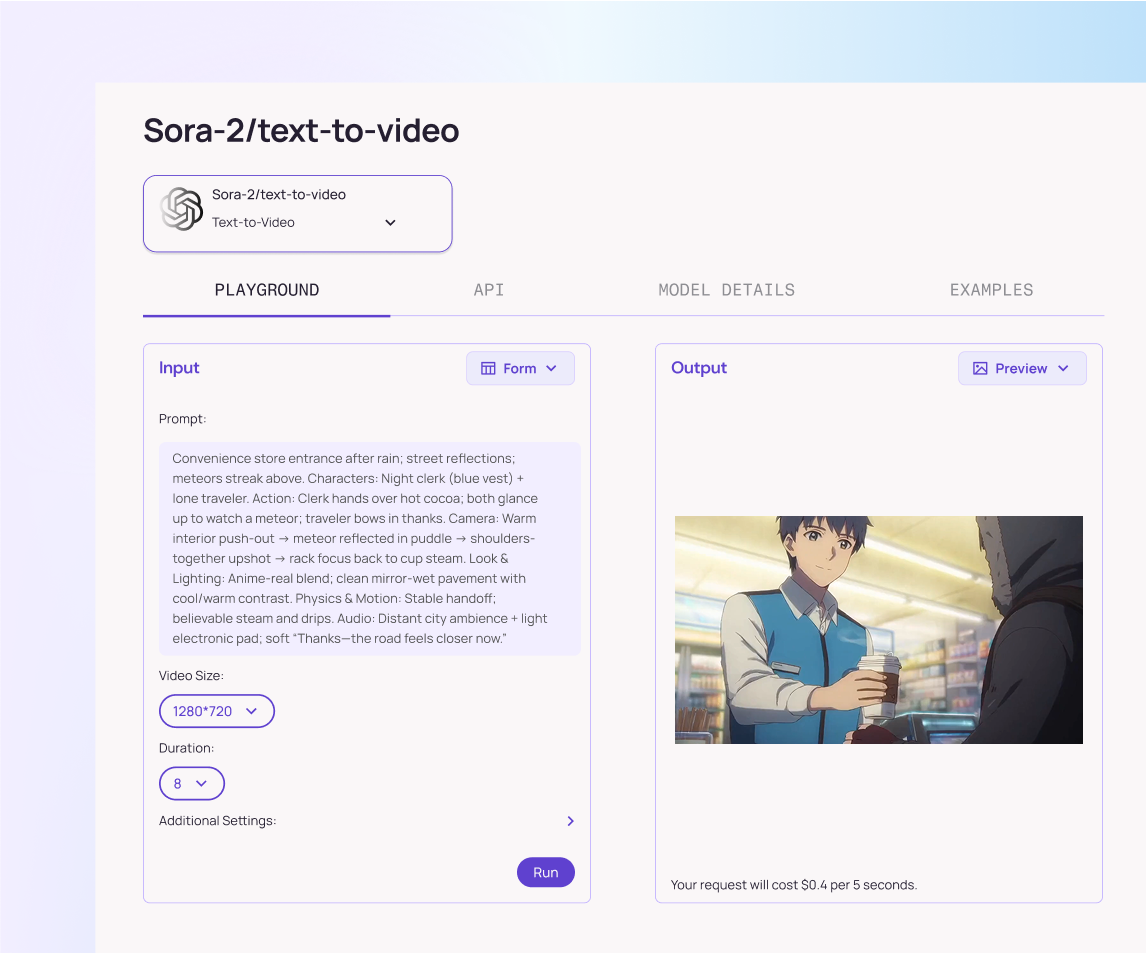

OpenAI Sora 2 is a state-of-the-art text-to-video model with realistic visuals, accurate physics, synchronized audio, and strong steerability.

What Stands Out - Sora-2 Video Models

Real-World Physics

Simulates gravity, lighting, and object interactions with physical realism for life-like motion and reflections.

Synced Audio Generation

Produces ambient sounds, voices, and effects precisely matched to scene timing and motion.

Fine-Grained Control

Adjust pacing, cinematography, transitions, and tone directly through natural-language prompts.

Multi-Shot Narratives

Generates multi-scene sequences with coherent characters and environments in a single run.

Dynamic Camera Movement

Handles complex pans, zooms, and dolly shots with cinematic continuity and spatial consistency.

Rich Style Range

Supports diverse looks, from documentary realism to stylized animation, while preserving motion fidelity.

What You Can Do - Sora-2 Video Models

Generate high-fidelity videos directly from text prompts with synchronized sound, realistic motion, and cinematic framing.

Animate any still image into a dynamic scene, adding movement, depth, and atmosphere true to its original style.

Extend or re-edit existing clips to continue scenes, shift camera angles, or evolve lighting and action seamlessly.

Create visually consistent multi-shot sequences that maintain coherent characters, perspective, and tone across transitions.

Produce diverse visual styles, from photorealistic storytelling to painterly or abstract animation, with accurate sound design.

Generate complete audio-visual compositions ready for post-production, content creation, or rapid prototyping.

Why Use Sora-2 Video Models on Atlas Cloud

Combining the advanced Sora-2 Video Models with Atlas Cloud's GPU-accelerated platform delivers unmatched performance, scalability, and developer experience.

Experience Sora-2 on Atlas Cloud, where physics, continuity, and creativity come alive in motion.

Performance and Flexibility

Low Latency:

GPU-optimized inference for real-time reasoning.

Unified API:

Run Sora-2 Video Models, GPT, Gemini, and DeepSeek with a single integration.

Transparent Pricing:

Predictable per-token billing with serverless options.

Enterprise and Scale

Developer Experience:

SDKs, analytics, fine-tuning tools, and templates.

Reliability:

99.99% uptime, RBAC, and compliance-ready logging.

Security and Compliance:

SOC 2 Type II, HIPAA alignment, and data sovereignty in the United States.

Explore More Families

Z.ai LLM Models

The Z.ai LLM family pairs strong language understanding and reasoning with efficient inference to keep costs low, offering flexible deployment and tooling that make it easy to customize and scale advanced AI across real-world products.

Seedance 1.5 Video Models

Seedance is ByteDance’s family of video generation models, built for speed, realism, and scale. Its AI analyzes motion, setting, and timing to generate matching ambient sounds, then adds creative depth through spatial audio and atmosphere, making each video feel natural, immersive, and story-driven.

Moonshot LLM Models

The Moonshot LLM family delivers cutting-edge performance on real-world tasks, combining strong reasoning with ultra-long context to power complex assistants, coding, and analytical workflows, making advanced AI easier to deploy in production products and services.

Wan2.6 Video Models

Wan 2.6 is Alibaba’s state-of-the-art multimodal video generation model, capable of producing high-fidelity, audio-synchronized videos from text or images. It lets you create videos of up to 15 seconds while preserving narrative flow and visual integrity, making it well suited to YouTube Shorts, Instagram Reels, Facebook clips, and TikTok videos.

Flux.2 Image Models

The Flux.2 Series is a comprehensive family of AI image generation models. Across the lineup, Flux supports text-to-image, image-to-image, reconstruction, contextual reasoning, and high-speed creative workflows.

Nano Banana Image Models

Nano Banana is a fast, lightweight image generation model for playful, vibrant visuals. Optimized for speed and accessibility, it creates high-quality images with smooth shapes, bold colors, and clear compositions—perfect for mascots, stickers, icons, social posts, and fun branding.

Image and Video Tools

Open, advanced large-scale image generative models that power high-fidelity creation and editing with modular APIs, reproducible training, built-in safety guardrails, and elastic, production-grade inference at scale.

LTX-2 Video Models

LTX-2 is a complete AI creative engine. Built for real production workflows, it delivers synchronized audio and video generation, 4K video at 48 fps, multiple performance modes, and radical efficiency, all with the openness and accessibility of running on consumer-grade GPUs.

Qwen Image Models

Qwen-Image is Alibaba’s open image generation model family. Built on advanced diffusion and Mixture-of-Experts design, it delivers cinematic quality, controllable styles, and efficient scaling, empowering developers and enterprises to create high-fidelity media with ease.

OpenAI Model Families

Explore OpenAI’s language and video models on Atlas Cloud: ChatGPT for advanced reasoning and interaction, and Sora-2 for physics-aware video generation.

Hailuo Video Models

MiniMax Hailuo video models deliver text-to-video and image-to-video at native 1080p (Pro) and 768p (Standard), with strong instruction following and realistic, physics-aware motion.

Wan2.5 Video Models

Wan 2.5 is Alibaba’s state-of-the-art multimodal video generation model, capable of producing high-fidelity, audio-synchronized videos from text or images. It delivers realistic motion, natural lighting, and strong prompt alignment across 480p to 1080p outputs—ideal for creative and production-grade workflows.

Only at Atlas Cloud.