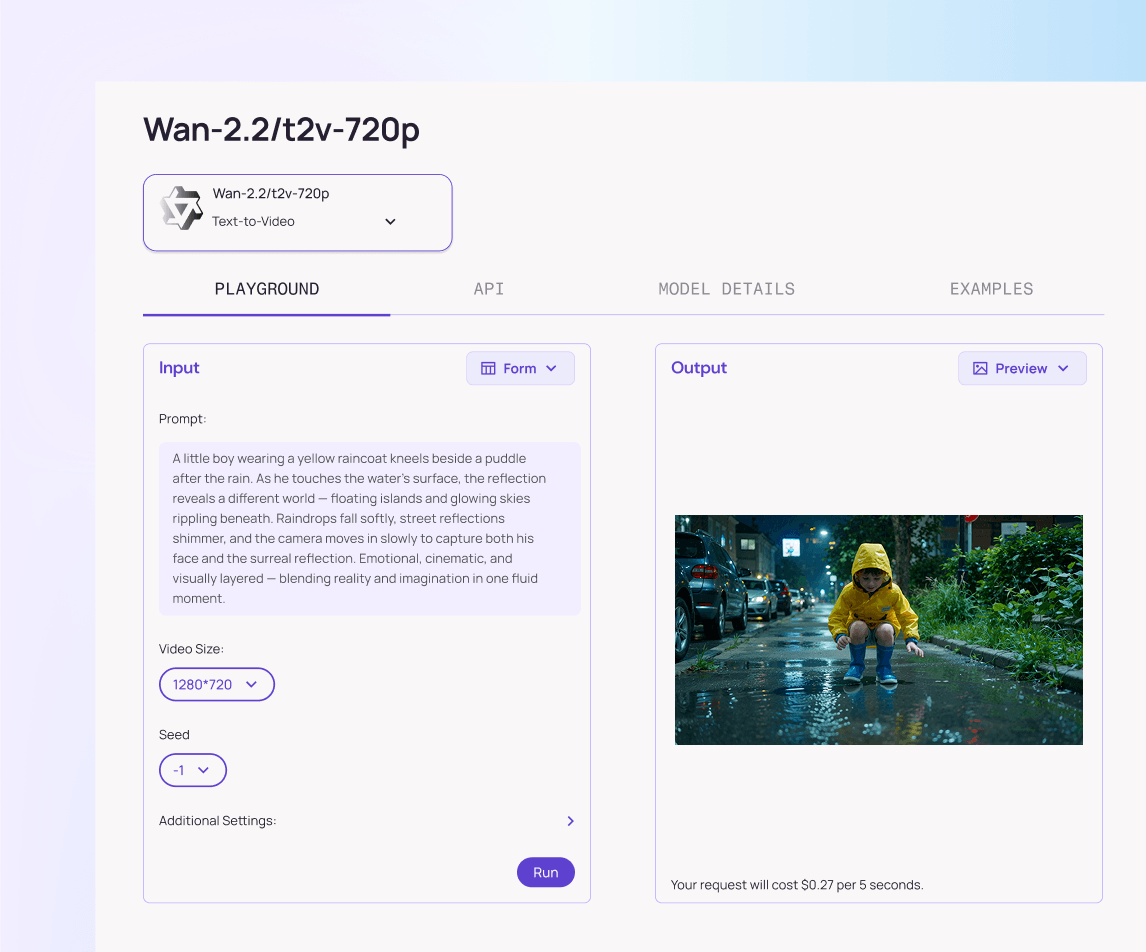

Wan2.2 Media Models

Wan 2.2 introduces a Mixture-of-Experts (MoE) architecture that enables greater capacity and finer motion control without higher inference cost, supporting both text-to-video and image-to-video generation with high visual fidelity, smooth motion, and cinematic realism optimized for real-world GPU deployment.

주요 모델 탐색

Wan-2.2 Animate

Open and Advanced Large-Scale Video Generative Models.

Wan-2.2 t2v 5b 720p Lora

Open and Advanced Large-Scale Video Generative Models.

Wan-2.2 i2v 5b 720p Lora

Open and Advanced Large-Scale Video Generative Models.

Wan-2.2 t2v 480p Lora Ultra Fast

Open and Advanced Large-Scale Video Generative Models.

Wan-2.2 i2v 720p Ultra Fast

Open and Advanced Large-Scale Video Generative Models.

Wan-2.2 i2v 720p Lora Ultra Fast

Open and Advanced Large-Scale Video Generative Models.

Wan-2.2 i2v 480p Ultra Fast

Open and Advanced Large-Scale Video Generative Models.

Wan-2.2 i2v 480p Lora Ultra Fast

Open and Advanced Large-Scale Video Generative Models.

Wan-2.2-spicy Video Extend

Open and Advanced Large-Scale Video Generative Models.

Wan-2.2-spicy Video Extend Lora

Open and Advanced Large-Scale Video Generative Models.

Wan-2.2-spicy Image-to-video

Open and Advanced Large-Scale Video Generative Models.

Wan-2.2-spicy Image-to-video Lora

Open and Advanced Large-Scale Video Generative Models.

Wan-2.2 t2v 480p Ultra Fast

Open and Advanced Large-Scale Video Generative Models.

Wan-2.2 t2v 5b 720p

Open and Advanced Large-Scale Video Generative Models.

Wan-2.2 i2v 5b 720p

Open and Advanced Large-Scale Video Generative Models.

Wan-2.2 i2v 480p

Open and Advanced Large-Scale Video Generative Models.

Wan-2.2 i2v 720p

Open and Advanced Large-Scale Video Generative Models.

Wan-2.2 t2v 480p

Open and Advanced Large-Scale Video Generative Models.

Wan-2.2 t2v 720p

Open and Advanced Large-Scale Video Generative Models.

주요 특징 - Wan2.2 Media Models

End-to-End Visual Generation

Create and transform images and videos from text, images, or existing clips in one unified model suite.

High-Fidelity Output

Maintain photorealistic detail across edits and animation.

Animate Images Naturally

Turn a single photo into smooth, coherent video with realistic motion and timing.

Creative Control

Edit with prompts, sketches, or styles at object level.

Multilingual Prompts

Understand English, Chinese, and more equally well.

Production Ready

Fast, cost-efficient, and API-ready for scale.

가능한 작업 - Wan2.2 Media Models

Generate realistic and cinematic videos directly from text or image prompts using Wan 2.2’s Mixture-of-Experts diffusion architecture.

Animate a still image into smooth, coherent video motion with strong temporal stability and expressive detail.

Transform input scenes or frames by changing style, lighting, or composition while preserving structure and motion consistency.

Produce high-quality videos efficiently with optimized inference and improved motion control compared to Wan 2.1.

Integrate Wan 2.2 models into creative, research, or production pipelines for controllable t2v and i2v generation.

Atlas Cloud에서 Wan2.2 Media Models을(를) 사용하는 이유

고급 Wan2.2 Media Models 모델과 Atlas Cloud의 GPU 가속 플랫폼을 결합하여 비교할 수 없는 성능, 확장성 및 개발자 경험을 제공합니다.

Reality in motion. Powered by Atlas Cloud, Wan 2.2 brings life, light, and emotion into every frame with natural precision.

성능 및 유연성

낮은 지연 시간:

실시간 추론을 위한 GPU 최적화 추론.

통합 API:

하나의 통합으로 Wan2.2 Media Models, GPT, Gemini 및 DeepSeek를 실행합니다.

투명한 가격:

Serverless 옵션을 포함한 예측 가능한 token당 청구.

엔터프라이즈 및 확장

개발자 경험:

SDK, 분석, 파인튜닝 도구 및 템플릿.

신뢰성:

99.99% 가동 시간, RBAC 및 규정 준수 로깅.

보안 및 규정 준수:

SOC 2 Type II, HIPAA 준수, 미국 내 데이터 주권.

더 많은 패밀리 탐색

Z.ai LLM Models

The Z.ai LLM family pairs strong language understanding and reasoning with efficient inference to keep costs low, offering flexible deployment and tooling that make it easy to customize and scale advanced AI across real-world products.

Seedance 1.5 Video Models

Seedance is ByteDance’s family of video generation models, built for speed, realism, and scale. Its AI analyzes motion, setting, and timing to generate matching ambient sounds, then adds creative depth through spatial audio and atmosphere, making each video feel natural, immersive, and story-driven.

Moonshot LLM Models

The Moonshot LLM family delivers cutting-edge performance on real-world tasks, combining strong reasoning with ultra-long context to power complex assistants, coding, and analytical workflows, making advanced AI easier to deploy in production products and services.

Wan2.6 Video Models

Wan 2.6 is Alibaba’s state-of-the-art multimodal video generation model, capable of producing high-fidelity, audio-synchronized videos from text or images. Wan 2.6 will let you create videos of up to 15 seconds, ensuring narrative flow and visual integrity. It is perfect for creating YouTube Shorts, Instagram Reels, Facebook clips, and TikTok videos.

Flux.2 Image Models

The Flux.2 Series is a comprehensive family of AI image generation models. Across the lineup, Flux supports text-to-image, image-to-image, reconstruction, contextual reasoning, and high-speed creative workflows.

Nano Banana Image Models

Nano Banana is a fast, lightweight image generation model for playful, vibrant visuals. Optimized for speed and accessibility, it creates high-quality images with smooth shapes, bold colors, and clear compositions—perfect for mascots, stickers, icons, social posts, and fun branding.

Image and Video Tools

Open, advanced large-scale image generative models that power high-fidelity creation and editing with modular APIs, reproducible training, built-in safety guardrails, and elastic, production-grade inference at scale.

Ltx-2 Video Models

LTX-2 is a complete AI creative engine. Built for real production workflows, it delivers synchronized audio and video generation, 4K video at 48 fps, multiple performance modes, and radical efficiency, all with the openness and accessibility of running on consumer-grade GPUs.

Qwen Image Models

Qwen-Image is Alibaba’s open image generation model family. Built on advanced diffusion and Mixture-of-Experts design, it delivers cinematic quality, controllable styles, and efficient scaling, empowering developers and enterprises to create high-fidelity media with ease.

Open AI Model Families

Explore OpenAI’s language and video models on Atlas Cloud: ChatGPT for advanced reasoning and interaction, and Sora-2 for physics-aware video generation.

Hailuo Video Models

MiniMax Hailuo video models deliver text-to-video and image-to-video at native 1080p (Pro) and 768p (Standard), with strong instruction following and realistic, physics-aware motion.

Wan2.5 Video Models

Wan 2.5 is Alibaba’s state-of-the-art multimodal video generation model, capable of producing high-fidelity, audio-synchronized videos from text or images. It delivers realistic motion, natural lighting, and strong prompt alignment across 480p to 1080p outputs—ideal for creative and production-grade workflows.

Atlas Cloud에서만.